Understanding Muon

Chapter 1: Into the Matrix

July 21, 2025

"To understand Muon, the first step is to enter the Matrix."

—Not quite Morpheus

- Chapter 1: Into the Matrix (speedy Muon explainer based on common confusion points)

- Chapter 2: Source Code (annotated PyTorch implementation + usage advice)

- Chapter 3: Weight Regulation (MuonClip, our work, and future directions)

Now let's dive in... to the Matrix.

Neo, The One (in which we see that optimizers like to make updates of size one)

"You take the blue pill? The story ends, and you believe that a gradient's entries are unrelated numbers."

"You take the red pill? You stay in Wonderland, and I show you how deep the rabbit hole goes."

Let's start with a thought experiment. If the gradient is 10x as large, should an optimizer step 10x as far?

Almost the opposite:

- If the loss is $y=(x/100)^2$, the gradients are small, but the model should take big steps.

- If the loss is $y=(100x)^2$, the gradients are big, but the model should take small steps.

As a preview of what is to come, many optimizers therefore take some form of the following advice:

"No matter how giant or tiny the gradient is, move a fixed distance of one."

But elementwise optimizers have a limited menu for how to take this advice. The simplest way is SignSGD:

- Instead of SGD: $W_{n+1} = W_n - \eta \cdot G_{n+1}$

- Do SignSGD: $W_{n+1} = W_n - \eta \cdot \text{sign}(G_{n+1})$

where $\eta > 0$ is the learning rate. SignSGD turns every number in the gradient into either $1$ or $-1$.
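To make the thought experiment concrete, here is a minimal NumPy sketch. The helpers `sgd_step` and `signsgd_step` are hypothetical names, and the learning rate $0.1$ is arbitrary. It evaluates the gradient of $y = (ax)^2$ at $x = 1$ for $a = 1/100$ and $a = 100$ and compares how far each rule moves:

```python
import numpy as np

def sgd_step(w, grad, lr):
    # SGD: the step is proportional to the gradient's magnitude
    return w - lr * grad

def signsgd_step(w, grad, lr):
    # SignSGD: each gradient entry becomes +1 or -1 (np.sign maps 0 to 0)
    return w - lr * np.sign(grad)

x, lr = 1.0, 0.1
for a in (1 / 100, 100):
    grad = 2 * a**2 * x  # derivative of y = (a*x)^2 at x
    print(f"a={a:g}: grad={grad:g}, "
          f"SGD moves {x - sgd_step(x, grad, lr):g}, "
          f"SignSGD moves {x - signsgd_step(x, grad, lr):g}")
```

SGD's move tracks the gradient (tiny for the flat loss, enormous for the steep one), while SignSGD moves exactly $\eta$ in both cases.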

The Adam optimizer actually reduces to SignSGD when its momentum is turned off (Anthology, Story 1). With momentum on, Adam's updates are akin to $\frac{m_1}{\sqrt{m_2}}$, where $m_1$ tracks $G$, and $m_2$ tracks $G^2$ (elementwise square). Its updates resemble $\frac{G}{\sqrt{G^2}} = \text{sign}(G)$, and each entry of its updates hovers near one in magnitude. Yet Adam measures "distance one" entry by entry, even though individual entries relate only indirectly to what the weight matrix does in a neural network.
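A small NumPy sketch of that reduction, assuming the standard Adam recursion with bias correction. The function `adam_direction` is a hypothetical name and omits the learning rate. Setting both momentum coefficients to zero leaves $m_1 = G$ and $m_2 = G^2$, so the direction becomes $G / \sqrt{G^2}$:

```python
import numpy as np

def adam_direction(grads, beta1, beta2, eps=1e-8):
    """Adam's update direction m1_hat / (sqrt(m2_hat) + eps) after a
    nonempty sequence of gradients (learning rate omitted)."""
    m1 = np.zeros_like(grads[0])
    m2 = np.zeros_like(grads[0])
    for g in grads:
        m1 = beta1 * m1 + (1 - beta1) * g      # tracks G
        m2 = beta2 * m2 + (1 - beta2) * g**2   # tracks G^2, elementwise
    t = len(grads)
    m1_hat = m1 / (1 - beta1**t)               # bias correction
    m2_hat = m2 / (1 - beta2**t)
    return m1_hat / (np.sqrt(m2_hat) + eps)

rng = np.random.default_rng(0)
G = rng.normal(size=(4, 3))

# Momentum off (beta1 = beta2 = 0) and eps = 0: the direction is sign(G)
print(np.allclose(adam_direction([G], 0.0, 0.0, eps=0.0), np.sign(G)))
```

With $\epsilon = 0$ the match holds up to rounding; Adam's usual small $\epsilon$ only matters for entries whose magnitude is comparable to $\epsilon$.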

But Muon... it has entered the Matrix. It does not see entries of a gradient matrix. It sees the matrix itself.

And that will be very useful so that Muon can exploit a more sophisticated way to measure "distance one."

The Fundamental Tension (in which a norm defines "size one" for a weight update)

"When I made the Matrix, I did not force it to choose a measure of size," began the Architect. "I was idealistic then. So for you I chose a norm $\| \cdot \| : \mathbb{R}^{n \times m} \to \mathbb{R}$, and it is how you came to be."

Why is distance one a good compromise? Optimizers constantly struggle against the fundamental tension: they want the loss to go down as far as possible, but they don't want to disturb the model's output too much, since the gradient is only accurate at the exact point where it was measured. To step a finite distance is always a leap of faith.

But how should we measure how far we are leaping? This is a question Muon innovates on.

Suppose we have a single linear layer, $y = Wx$. If we update the weights to be $W + \Delta W$, then how much can the output $y$ change? We'd like a measure of size called $\| \Delta W \|$ where we'd know that $\| y \|$ wouldn't change by more than $\| \Delta W \| \| x \|$. Then even though we actually care about controlling output change, we could get away with controlling the weight update size. So we arrive at a compromise:

- Move toward the negative gradient as far as possible (aka minimize an inner product, $\underset{\Delta W}{\text{argmin}} \, \langle G, \Delta W \rangle$)

- While not stepping farther than distance one (aka norm constraint, $\text{s.t. } \| \Delta W \| \leq 1$)

The compromise says to maximize the alignment between the negative gradient $-G$ and the weight update $\Delta W$, but not step farther than distance one. Without the constraint there would be no minimizer: $\Delta W = -c \cdot G$ gives $\langle G, \Delta W \rangle = -c \sum_{i,j} G_{ij}^2 \rightarrow -\infty$ as $c \rightarrow \infty$.

But how do we measure the size $\| \Delta W \|$?

To see, forget that a matrix is made of numbers. Forget that a matrix is called a matrix.

A linear transformation is all there is, $A : \mathbb{R}^{d_\text{in}} \to \mathbb{R}^{d_\text{out}}$. The transformation sends vectors to vectors.

Its inputs are lists of numbers, and we'd like to measure $(\pm 1, \dots, \pm 1)$ as size $1$ no matter how long the list is. That's because we'd like activations in our neural network to have entries around $1$ or $-1$. It's what $\mu\text{P}$ recommends for consistent dimension scaling, and it's the most expressive range for floating point numbers.

But the regular $\ell_2$ norm $\| v \|_2^2 = v_1^2 + \cdots + v_n^2$ will not do, because it judges all-ones vectors differently depending on dimension. Instead we use the root-mean-square norm $\| v \|_\text{RMS}^2 = \tfrac{1}{n} (v_1^2 + \cdots + v_n^2)$.
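A quick NumPy check (the helper names are ours) that the RMS norm, unlike the $\ell_2$ norm, assigns size $1$ to all-ones and random $\pm 1$ vectors of any length:

```python
import numpy as np

def l2_norm(v):
    return np.sqrt(np.sum(v**2))

def rms_norm(v):
    # ||v||_RMS^2 = (v_1^2 + ... + v_n^2) / n
    return np.sqrt(np.mean(v**2))

for n in (4, 1024):
    ones = np.ones(n)
    # l2 grows like sqrt(n); RMS stays at 1 regardless of n
    print(n, l2_norm(ones), rms_norm(ones))

# The same holds for a random +/-1 vector
signs = np.random.default_rng(0).choice([-1.0, 1.0], size=4096)
print(rms_norm(signs))
```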

How much can $A$ change vectors? Imagine the linear transformation takes all vectors $v$ with $\| v \|_\text{RMS} = 1$, and it bends this sphere into an oblong shape. Whichever vector is made the largest, its size will be $A$'s size: $$\| A \|_{\text{RMS} \rightarrow \text{RMS}} := \underset{v : \| v \|_\text{RMS} = 1}{\max} \| A v \|_\text{RMS}.$$

This measure is the $\text{RMS}$ to $\text{RMS}$ norm, the maximum stretch the $\text{RMS}$ norm can detect. It is a measure of size that accounts for what the matrix actually does in a neural network: transform vectors. And like we wanted, a weight update $\Delta W$ causes at most an activation change $\|\Delta y\|_{\text{RMS}} \leq \| \Delta W \|_{\text{RMS} \rightarrow \text{RMS}} \|x\|_{\text{RMS}}$.

So we arrive back to our compromise, now armed with a specific norm. But what is the solution to

$$\underset{\Delta W \text{ s.t. } \| \Delta W \|_{\text{RMS} \rightarrow \text{RMS}} \leq 1}{\text{argmin}} \langle G, \Delta W \rangle?$$

We can reformulate the constraint using the spectral norm, which measures matrix size via the $\ell_2$ norm: $$\| \Delta W \|_{\text{RMS} \rightarrow \text{RMS}} = \sqrt{\frac{d_\text{in}}{d_\text{out}}} \| \Delta W \|_{\ell_2 \rightarrow \ell_2}.$$
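The reformulation can be checked numerically. This NumPy sketch (helper names are ours, and the matrix is random) computes $\| \Delta W \|_{\text{RMS} \rightarrow \text{RMS}}$ from the spectral norm, confirms that random inputs never exceed the bound $\|\Delta W x\|_{\text{RMS}} \leq \| \Delta W \|_{\text{RMS} \rightarrow \text{RMS}} \|x\|_{\text{RMS}}$, and confirms that the top right singular vector attains it:

```python
import numpy as np

def rms(v):
    return np.sqrt(np.mean(v**2))

def rms_to_rms_norm(A):
    # ||A||_{RMS->RMS} = sqrt(d_in / d_out) * ||A||_{l2->l2}
    d_out, d_in = A.shape
    return np.sqrt(d_in / d_out) * np.linalg.norm(A, ord=2)

rng = np.random.default_rng(0)
d_out, d_in = 32, 128
dW = rng.normal(size=(d_out, d_in))
norm = rms_to_rms_norm(dW)

# No input is stretched by more than the norm
xs = rng.normal(size=(1000, d_in))
ratios = np.array([rms(dW @ x) / rms(x) for x in xs])
print(ratios.max() <= norm * (1 + 1e-12))

# The top right singular vector attains the maximum stretch
_, _, Vt = np.linalg.svd(dW)
v1 = Vt[0]
print(np.isclose(rms(dW @ v1) / rms(v1), norm))
```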

And the spectral norm $\| \cdot \|_{\ell_2 \rightarrow \ell_2}$ will be very useful for us because of its connection to singular values.

Every matrix $A \in \mathbb{R}^{d_\text{out} \times d_\text{in}}$ has a singular value decomposition $A = U \Sigma V^T$, where $U$ and $V$ are orthogonal (meaning they preserve a vector's norm) and $\Sigma$ is diagonal with nonnegative entries $\sigma_1 \geq \sigma_2 \geq \cdots \geq \sigma_r \geq 0$, $r = \min(d_\text{out}, d_\text{in})$, called the singular values. The spectral norm of $A$ is its largest singular value $\sigma_1$. Here's how we can use that.

Expanding out the SVD, we can write $G = \sum_k \sigma_k u_k v_k^T$, where $u_k$ and $v_k$, the columns of $U$ and $V$, are orthonormal vectors. Then

$$\underset{\| \Delta W \|_{\ell_2 \rightarrow \ell_2} \leq 1}{\text{argmin}} \langle G, \Delta W \rangle = \underset{\| \Delta W \|_{\ell_2 \rightarrow \ell_2} \leq 1}{\text{argmin}} \sum_k \sigma_k (u_k^T \Delta W v_k).$$

Now we can minimize each singular vector pair's term independently. For unit vectors $u_k$ and $v_k$, each term satisfies $u_k^T \Delta W v_k \geq -\| \Delta W \|_{\ell_2 \rightarrow \ell_2} \geq -1$, so each pair has a budget of $1$ and the whole sum is at least $-\sum_k \sigma_k$. So use up every budget: set all the singular values to $1$, and keep the singular vectors $u_k$ and $v_k$ (with a minus sign) to maximize alignment with $-G$. In other words, the solution is $$\Delta W = -UV^T$$ if $G = U \Sigma V^T$, which makes every term exactly $-1$. This operation is called orthogonalizing. Finally we multiply by $\sqrt{d_\text{out} / d_\text{in}}$ to recover dimension-independence from the $\text{RMS}$ to $\text{RMS}$ norm. (More detail and full proof in Anthology, Story 2.)
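A NumPy sketch of the solution on a random stand-in for the gradient (any $G$ works). It checks that $-UV^T$ has spectral norm $1$, that it attains $\langle G, \Delta W \rangle = -\sum_k \sigma_k$, that random candidates with spectral norm $1$ never score lower, and that the final rescaling gives $\text{RMS}$ to $\text{RMS}$ norm $1$:

```python
import numpy as np

def inner(A, B):
    # Frobenius inner product <A, B> = sum_ij A_ij B_ij
    return np.sum(A * B)

rng = np.random.default_rng(0)
d_out, d_in = 16, 8
G = rng.normal(size=(d_out, d_in))

# Reduced SVD: G = U diag(S) V^T
U, S, Vt = np.linalg.svd(G, full_matrices=False)
dW = -U @ Vt  # every singular value set to 1, singular vectors kept, sign flipped

print(np.linalg.norm(dW, ord=2))  # spectral norm: 1 up to rounding
print(inner(G, dW), -S.sum())     # every term is -1, so the sum is -sum_k sigma_k

# Random feasible candidates (spectral norm exactly 1) never do better
for _ in range(1000):
    C = rng.normal(size=(d_out, d_in))
    C /= np.linalg.norm(C, ord=2)
    assert inner(G, C) >= inner(G, dW) - 1e-9

# Rescale so that the RMS->RMS norm (rather than the spectral norm) is 1
update = np.sqrt(d_out / d_in) * dW
print(np.sqrt(d_in / d_out) * np.linalg.norm(update, ord=2))  # 1 up to rounding
```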

So we've resolved the fundamental tension. We know the update step—but can we compute it?

Bend the Singular Values (in which we learn that odd polynomials edit singular values)

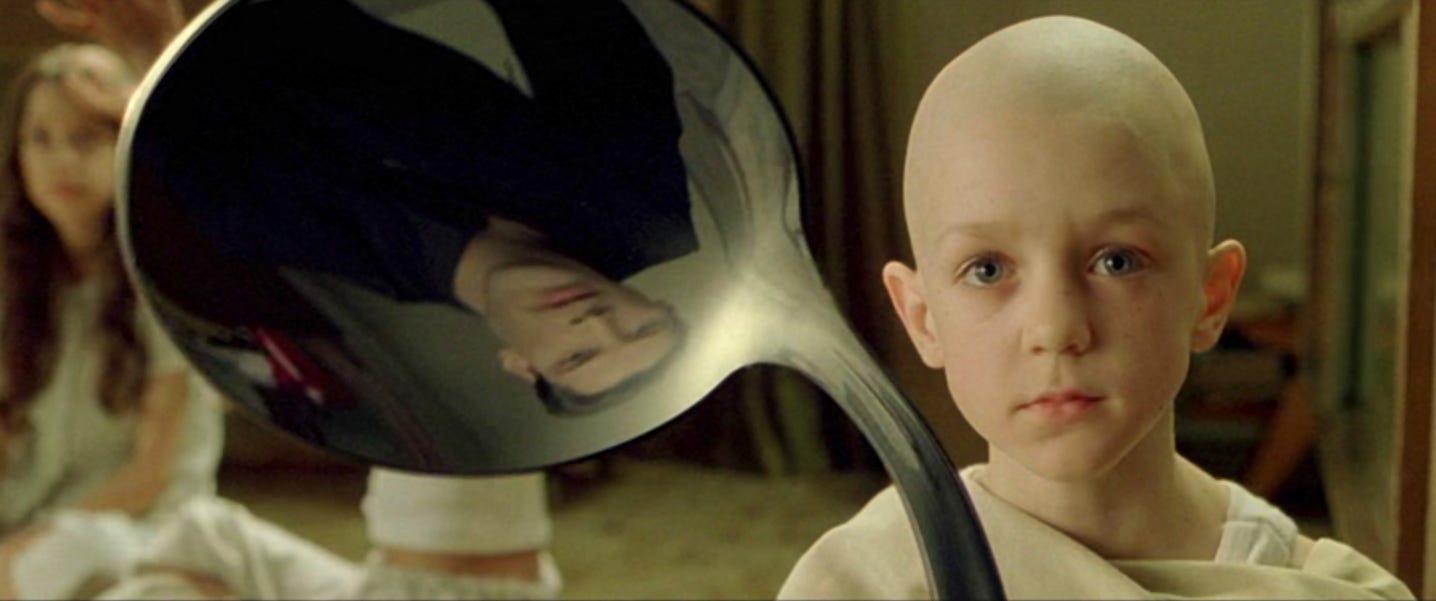

The bald boy said, "Do not try to bend the singular values, that's impossible. The direct SVD calculation $$G = U \Sigma V^T \mapsto U f(\Sigma) V^T$$ that sends every singular value $\sigma \mapsto f(\sigma)$ doesn't parallelize well on a GPU. Instead, only try to realize the truth... you don't need to see the singular values to bend them."

If we could somehow apply a function $f$ to the singular values ("bending the singular values"), then we'd be done: using $f(x) = 1$, we get $U f(\Sigma) V^T = U V^T$. But materializing the SVD isn't friendly to the GPU.

To bend singular values, there's a wonderful trick that Jeremy Bernstein devised in August 2024.

Take the matrix, and cube it! Suddenly, the matrix changes but is still recognizable: $$G^3 := (GG^T)G = (U \Sigma V^T V \Sigma U^T) U \Sigma V^T = U \Sigma^3 V^T.$$

We can take more odd powers, $G^{2k+1} := (GG^T)^k G = U \Sigma^{2k+1} V^T$. The odd powers pass straight through to the singular values since $V^TV = I$ and $U^TU = I$. By linearity, any odd polynomial $p(x) = a_0x + a_1x^3 + \cdots + a_kx^{2k+1}$ bends singular values as directly as it bends a single number, without seeing a single one of them: $$\underbrace{p(U \Sigma V^T)}_{\text{acts on matrix}} = \underbrace{U p(\Sigma) V^T}_{\text{acts on numbers}}.$$
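To see that an odd polynomial of a matrix really acts on its singular values, here is a NumPy sketch. `odd_poly_matrix` is a hypothetical helper and the coefficients are arbitrary. It builds $p(G)$ from matrix products alone, then compares against $U p(\Sigma) V^T$ computed with an explicit SVD:

```python
import numpy as np

def odd_poly_matrix(G, coeffs):
    """Hypothetical helper: p(G) = a0*G + a1*G^3 + a2*G^5 + ...,
    with G^(2k+1) := (G G^T)^k G, built from matrix products only."""
    gram = G @ G.T            # G G^T = U Sigma^2 U^T
    power = G.copy()          # current odd power, starting at G^1
    result = np.zeros_like(G)
    for a in coeffs:
        result += a * power
        power = gram @ power  # G^(2k+1) -> G^(2k+3)
    return result

rng = np.random.default_rng(0)
G = rng.normal(size=(6, 4))
U, S, Vt = np.linalg.svd(G, full_matrices=False)

# Cubing the matrix cubes the singular values
print(np.allclose(G @ G.T @ G, U @ np.diag(S**3) @ Vt))

# An arbitrary odd polynomial p(x) = 2x - 1.5x^3 + 0.5x^5
coeffs = [2.0, -1.5, 0.5]
p_of_S = sum(a * S**(2 * k + 1) for k, a in enumerate(coeffs))
print(np.allclose(odd_poly_matrix(G, coeffs), U @ np.diag(p_of_S) @ Vt))
```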

With this new power, we do two things. We squash the gradient's singular values into the range $[0, 1]$ by dividing by something guaranteed to be at least the spectral norm, say the Frobenius norm $\| G \|_F = \sqrt{\sum_{i,j} G_{ij}^2}$ (since $\| G \|_F^2 = \sum_k \sigma_k^2 \geq \sigma_1^2$). Then again and again and again we apply some polynomial that will push numbers in the range $[0, 1]$ toward $1$.
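The squash-then-iterate recipe can be sketched with a polynomial we pick for illustration: the classic cubic $p(x) = \tfrac{3}{2}x - \tfrac{1}{2}x^3$ from Newton-Schulz iteration, not the polynomial Muon uses. It maps $[0, 1]$ into $[0, 1]$ and has $1$ as an attracting fixed point, so repeating it drives every nonzero singular value to $1$. A NumPy sketch, where `orthogonalize_cubic` is a hypothetical name:

```python
import numpy as np

def orthogonalize_cubic(G, steps=30):
    """Approximate U V^T for G = U Sigma V^T using only matrix products.

    Assumes the classic cubic Newton-Schulz polynomial p(x) = 1.5x - 0.5x^3
    (not Muon's polynomial): it maps [0, 1] into [0, 1] and pushes every
    positive value toward the fixed point 1.
    """
    X = G / np.linalg.norm(G)  # Frobenius norm >= spectral norm, so sigma <= 1
    for _ in range(steps):
        X = 1.5 * X - 0.5 * (X @ X.T) @ X  # apply p to every singular value
    return X

rng = np.random.default_rng(0)
G = rng.normal(size=(16, 8))
U, S, Vt = np.linalg.svd(G, full_matrices=False)

for steps in (3, 30):
    err = np.abs(orthogonalize_cubic(G, steps) - U @ Vt).max()
    print(steps, err)  # the error shrinks as more steps are applied
```

The catch is speed: for small $\sigma$ this cubic only multiplies by about $1.5$ per step, so it needs many steps.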

What turns out to matter is how fast the polynomial can push very small $\sigma \ll 1$ toward $1$. Muon uses $5$ steps of a speedy polynomial with a large linear coefficient, $3.4445x$; its output does not converge exactly to $1$, and that turns out fine in practice.
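To compare speeds on plain numbers, the sketch below iterates two polynomials on a few sample singular values. It assumes Muon's quintic $p(x) = 3.4445x - 4.7750x^3 + 2.0315x^5$, with the coefficients as they appear in the open-source Muon implementation, and contrasts it with the slow cubic $1.5x - 0.5x^3$:

```python
import numpy as np

def quintic(x, a=3.4445, b=-4.7750, c=2.0315):
    # Muon-style odd quintic: p(x) = a x + b x^3 + c x^5
    return a * x + b * x**3 + c * x**5

def cubic(x):
    # Classic Newton-Schulz cubic, for comparison
    return 1.5 * x - 0.5 * x**3

sigmas = np.array([0.01, 0.05, 0.2, 0.5, 1.0])
quint_vals, cubic_vals = sigmas.copy(), sigmas.copy()
for _ in range(5):
    quint_vals, cubic_vals = quintic(quint_vals), cubic(cubic_vals)

print("quintic:", np.round(quint_vals, 3))  # pulled into a band near 1, not onto 1
print("cubic:  ", np.round(cubic_vals, 3))  # small values are still far from 1
```

After $5$ steps the quintic has moved even $\sigma = 0.01$ into a band near $1$, while the cubic leaves it far below.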

And let's see what Muon's polynomial does to a real gradient matrix, visualized with singular value histograms:

That's the surprising empirical fact: many gradient singular values are very small. Muon treats them all equally.

So we've followed the gradient as far as it will go, but only stepping distance $1$. This compromise leads to the map $G \mapsto \sqrt{\tfrac{d_\text{out}}{d_\text{in}}} \; U V^T$ for $G = U \Sigma V^T$, which we compute by iterating odd polynomials since they act directly on the singular values. And the distance we're catering to is fundamentally matrix-based. Controlling $\Delta W$ this way controls how much the optimizer update can affect the model output: for an input with unit RMS norm, the activation RMS changes by at most $1$.

To recover Muon, we add back the smoothness of momentum, constructing $M_{n+1} = \beta M_n + (1 - \beta) G_{n+1}$ for some parameter $\beta$, typically $0.95$. Muon proposes its core update as orthogonalized momentum:

$$W_{n+1} = W_n - \eta \cdot \sqrt{\frac{d_\text{out}}{d_\text{in}}} \, \text{orthogonalize}(M_{n+1}).$$
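Putting the pieces together, here is a minimal NumPy sketch of one update. The names `newton_schulz5` and `muon_step` are hypothetical, the learning rate is arbitrary, and the gradient is random noise standing in for a real one. It follows this chapter's formulas (momentum, Frobenius normalization, five quintic steps with the coefficients $(3.4445, -4.7750, 2.0315)$ from the open-source Muon implementation, and the $\sqrt{d_\text{out}/d_\text{in}}$ scale); the PyTorch code in Chapter 2 is the real reference and may differ in details:

```python
import numpy as np

def newton_schulz5(M, steps=5, eps=1e-7):
    """Approximately orthogonalize M with a quintic odd-polynomial iteration."""
    a, b, c = 3.4445, -4.7750, 2.0315
    X = M / (np.linalg.norm(M) + eps)  # Frobenius normalization: sigma <= 1
    for _ in range(steps):
        A = X @ X.T
        X = a * X + (b * A + c * A @ A) @ X  # p(X) = aX + bX^3 + cX^5
    return X

def muon_step(W, G, M, lr, beta=0.95):
    """One sketched Muon update for a weight matrix W of shape (d_out, d_in)."""
    M = beta * M + (1 - beta) * G            # momentum
    d_out, d_in = W.shape
    update = np.sqrt(d_out / d_in) * newton_schulz5(M)
    return W - lr * update, M

rng = np.random.default_rng(0)
d_out, d_in = 64, 32
W = 0.02 * rng.normal(size=(d_out, d_in))
M = np.zeros_like(W)
lr = 0.01  # hypothetical learning rate, for illustration only
for _ in range(3):
    G = rng.normal(size=W.shape)  # stand-in for a real gradient
    W_new, M = muon_step(W, G, M, lr)
    step = (W - W_new) / lr
    W = W_new
    # RMS->RMS norm of the step: roughly 1, since the quintic
    # only approximately orthogonalizes
    print(np.sqrt(d_in / d_out) * np.linalg.norm(step, ord=2))
```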

The spoon is bent without our even having looked at it. In the next chapter, we'll go through Muon's PyTorch code line by line so that you won't get stuck on any of it. And we'll give advice on learning rates and on when to use Muon.
